Everyone who uses OpenCV is familiar with cv::Mat, but some developers have never heard of the UMat class and its advantages.

The UMat class tells OpenCV functions to process images with OpenCL-specific code, which runs on an OpenCL-enabled GPU if one exists in the system and automatically falls back to the CPU otherwise. According to the Khronos Group, OpenCL™ (Open Computing Language) is:

> a royalty-free standard for cross-platform, parallel programming of diverse processors [..]. OpenCL greatly improves the speed and responsiveness of a wide spectrum of applications in numerous market categories including gaming and entertainment titles, scientific and medical software, professional creative tools, vision processing, and neural network training and inferencing.

OpenCV has had OpenCL implementations since version 2, but they weren’t simple and demanded a lot of adaptation of your code. Starting with version 3.0, OpenCV implemented the T-API (Transparent API), which adds hardware acceleration to a lot of “classic” functions. Since then, it has been able to detect, load, and use OpenCL devices and accelerated code automatically, so you don’t need to write any OpenCL code yourself: it’s all “transparent”.

To see how transparent it is, look at this piece of code for Mat processing:

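```cpp
#include <opencv2/opencv.hpp>
#include <string>

void MatProcessing(const std::string& name) {
    cv::Mat img, dst;
    img = cv::imread(name);                      // loads as 8-bit BGR by default
    cv::cvtColor(img, img, cv::COLOR_BGR2GRAY);  // convert to grayscale in place
    cv::bilateralFilter(img, dst, 0, 10, 3);     // d = 0: diameter derived from sigmaSpace
}
```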

Then compare it with its UMat equivalent:

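```cpp
#include <opencv2/opencv.hpp>
#include <string>

void UMatProcessing(const std::string& name) {
    cv::UMat Uimg, Udst;
    // imread returns a cv::Mat; copyTo gives the UMat its own buffer
    cv::imread(name, cv::IMREAD_COLOR).copyTo(Uimg);
    cv::cvtColor(Uimg, Uimg, cv::COLOR_BGR2GRAY);  // same calls as the Mat version
    cv::bilateralFilter(Uimg, Udst, 0, 10, 3);
}
```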

As you can see, it’s almost the same code, with Mat changed to UMat and a small adaptation to the imread call.

What about the performance change?

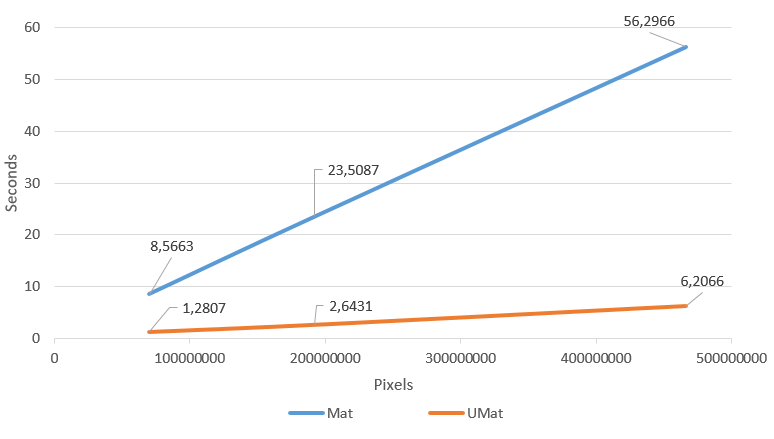

To see how UMat affects performance, I wrote a small program to measure the gain on my good ol’ i5 3330/Radeon HD 7970. The results are presented in Figure 1.

The gain is substantial: UMat took approximately 10% of Mat’s time in all cases. You can measure it yourself on your own PC; the code is on my GitHub. You just need to build OpenCV with the WITH_OPENCL flag set to ON for it to work.
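If you would rather write your own measurement, here is a minimal timing sketch that uses only the C++ standard library. It is not the benchmark program mentioned above; the helper name `meanMilliseconds` and the single warm-up call are assumptions made for this example. The warm-up is there because the first call on an OpenCL path may include one-time setup cost that would skew the average.

```cpp
#include <chrono>
#include <functional>

// Hypothetical helper: calls `work` once as a warm-up, then `runs` more times,
// and returns the mean wall-clock time per timed run in milliseconds.
double meanMilliseconds(const std::function<void()>& work, int runs) {
    using clock = std::chrono::steady_clock;
    work();  // warm-up call, not included in the measurement
    const auto start = clock::now();
    for (int i = 0; i < runs; ++i) {
        work();
    }
    const auto stop = clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count() / runs;
}
```

You could then compare `meanMilliseconds([&] { MatProcessing(name); }, 10)` against `meanMilliseconds([&] { UMatProcessing(name); }, 10)` for the same image. Keep in mind that both functions also read the file from disk, so the numbers include I/O as well as processing.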

OK, why not use UMat everywhere?

This huge performance improvement is enough to make everyone ask: “OK, why not change all my Mats to UMats?” Around the internet, this change seems to be strongly recommended. However, Intel says:

> Use the cv::UMat Datatype, But Not Everywhere. Use cv::UMat for images, and continue to use cv::Mat for other smaller data structures such as convolution matrices, transpose matrices, and so on.

Also, converting a big code base will probably not be as trivial as it sounds. Each case is different, and each one calls for a careful analysis of the problem.

Have fun with your projects!

