Testing machine learning models on mobile devices can be time-consuming.

Converting a model to TFLite, integrating it into an app, and running it takes considerable effort, especially in the early stages of a project, when we are mainly interested in metrics such as runtime and memory consumption.

Any tool that simplifies this work is welcome. In this post, I'll explain how to use TensorFlow's “Benchmark Model” tool for TFLite models. With it, we can measure a model's:

- Initialization time and memory

- Inference times

- Overall memory usage

The best part is that it's straightforward to run: you only need to pick the binary for your architecture (ARM, x86, etc.) and point it at the model you want to test.

Requirements for the tflite benchmark

To follow this tutorial, you need ADB installed and the model you want to test already converted to the TFLite format.
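Before starting, a quick pre-flight check can save some confusion. Below is a minimal sketch with a hypothetical `check_prereqs` helper (not part of the tool). It relies on `adb get-state`, which prints `device` when a single authorized device is connected:

```shell
# Hypothetical pre-flight check: is adb on PATH, is a device attached,
# and does the local model file exist?
check_prereqs() {
  model="$1"
  if ! command -v adb >/dev/null 2>&1; then
    echo "adb not found on PATH" >&2
    return 1
  fi
  # get-state prints "device" for a connected, authorized device.
  if [ "$(adb get-state 2>/dev/null)" != "device" ]; then
    echo "no device in 'device' state (try: adb devices)" >&2
    return 1
  fi
  if [ ! -f "$model" ]; then
    echo "model file not found: $model" >&2
    return 1
  fi
  echo "ok"
}
```

For example, `check_prereqs model_depth_test.tflite` prints `ok` when everything is in place.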

Let’s get started!

First, download the prebuilt binary that matches your device's architecture (TensorFlow publishes nightly builds of the benchmark tool, linked from its README on GitHub). For this example, I will use the “android_arm” version.

Prebuilt executables for Linux are also available.
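If you are unsure which build your device needs, you can ask ADB for the device's primary ABI. Below is a minimal sketch. It assumes the Android binaries follow the `android_aarch64_benchmark_model` / `android_arm_benchmark_model` naming of TensorFlow's nightly builds, and both helper names are hypothetical:

```shell
# Map an Android ABI string to the matching prebuilt benchmark binary name.
# Assumption: names follow TensorFlow's nightly build naming.
benchmark_binary_for_abi() {
  case "$1" in
    arm64-v8a)
      echo "android_aarch64_benchmark_model"
      ;;
    armeabi-v7a)
      echo "android_arm_benchmark_model"
      ;;
    *)
      echo "no prebuilt Android binary mapped for ABI '$1'" >&2
      return 1
      ;;
  esac
}

# Query the connected device's primary ABI; strip the \r some devices append.
device_abi() {
  adb shell getprop ro.product.cpu.abi | tr -d '\r'
}
```

Usage: `benchmark_binary_for_abi "$(device_abi)"`. This post uses the 32-bit `android_arm` build, which many 64-bit devices can also run.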

Let's create a folder on the Android device using ADB by running the following in a terminal:

```shell
adb shell "mkdir /data/local/tmp/benchmark/"
```

Next, push the downloaded executable to the folder we just created:

```shell
adb push android_arm_benchmark_model /data/local/tmp/benchmark/
```

Now, let’s push the model to the same folder to make it easier:

```shell
adb push model_depth_test.tflite /data/local/tmp/benchmark/
```

The pushed file may not have execute permission, so it's worth granting it:

```shell
adb shell chmod +x /data/local/tmp/benchmark/android_arm_benchmark_model
```
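The four setup steps above can be bundled into one helper, which is handy when you iterate over several models. Below is a minimal sketch. `push_benchmark` and `BENCH_DIR` are hypothetical names, and it uses `mkdir -p` so rerunning it does not fail when the folder already exists:

```shell
# Hypothetical helper bundling the setup steps: create the folder,
# push the binary and the model, then make the binary executable.
# Usage: push_benchmark <local_binary> <local_model.tflite>
BENCH_DIR=/data/local/tmp/benchmark

push_benchmark() {
  bin="$1"
  model="$2"
  if [ ! -f "$bin" ] || [ ! -f "$model" ]; then
    echo "usage: push_benchmark <binary> <model.tflite> (both files must exist)" >&2
    return 1
  fi
  # Stop at the first failing adb command.
  adb shell "mkdir -p $BENCH_DIR" &&
    adb push "$bin" "$BENCH_DIR/" &&
    adb push "$model" "$BENCH_DIR/" &&
    adb shell chmod +x "$BENCH_DIR/$(basename "$bin")"
}
```

For example: `push_benchmark android_arm_benchmark_model model_depth_test.tflite`.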

Time for testing. Let’s run it with a basic set of parameters:

```shell
adb shell /data/local/tmp/benchmark/android_arm_benchmark_model --graph=/data/local/tmp/benchmark/model_depth_test.tflite
```

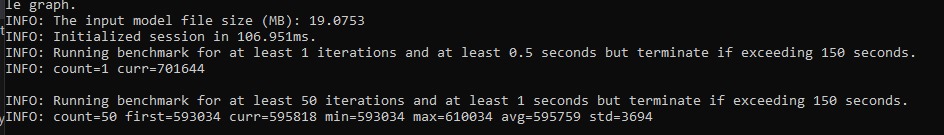

The important part of the output:

Great: we get useful statistics in the terminal without having to integrate the model into an application.
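To keep a copy of the full log while printing only the summary, you can pipe the output through a small filter. Below is a minimal sketch. The helper name `benchmark_summary` is hypothetical, and it assumes the summary lines contain the phrases `Inference timings` and `Memory footprint`. Adjust the patterns if your build prints something different:

```shell
# Keep only the timing and memory summary lines from a benchmark log on stdin.
# Assumption: summary lines contain these phrases; \r from adb is stripped first.
benchmark_summary() {
  tr -d '\r' | grep -E 'Inference timings|Memory footprint'
}
```

Usage: `adb shell /data/local/tmp/benchmark/android_arm_benchmark_model --graph=/data/local/tmp/benchmark/model_depth_test.tflite 2>&1 | tee cpu_run.log | benchmark_summary`. The `2>&1` is there in case the tool writes its log to stderr, and `tee` keeps the full output in `cpu_run.log`.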

There is a large number of parameters we can change to tailor the run, such as running on HVX, allowing float16 precision loss, and running on the GPU, among others. The full list is documented in the TensorFlow GitHub repository.
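For example, `--num_threads`, `--warmup_runs` and `--num_runs` control threading and how many runs are averaged, and `--enable_op_profiling=true` adds a per-operator timing breakdown. Below is a minimal sketch that wraps the command in a hypothetical `run_benchmark` helper. It assumes the binary and model were pushed to `/data/local/tmp/benchmark/` as above. The flag names come from the tool's documentation, but check them against your build:

```shell
# Hypothetical wrapper: run the on-device benchmark for <model.tflite>
# and forward any extra benchmark_model flags unchanged.
BENCH_DIR=/data/local/tmp/benchmark
BENCH_BIN=android_arm_benchmark_model

run_benchmark() {
  model="$1"
  shift
  adb shell "$BENCH_DIR/$BENCH_BIN" "--graph=$BENCH_DIR/$model" "$@"
}
```

Usage: `run_benchmark model_depth_test.tflite --num_threads=4 --warmup_runs=5 --num_runs=50 --enable_op_profiling=true`.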

Let's run it again, this time on the GPU, by adding `--use_gpu=true` to see how it performs:

```shell
adb shell /data/local/tmp/benchmark/android_arm_benchmark_model --graph=/data/local/tmp/benchmark/model_depth_test.tflite --use_gpu=true
```

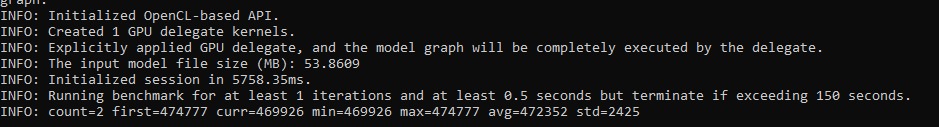

The output:

Great, just as expected: on the GPU, inference is much faster, but initialization takes longer.
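To compare several configurations side by side, you can loop over flag sets and keep only the summary lines. Below is a minimal sketch with a hypothetical `compare_configs` helper. It assumes the same on-device paths as above and that the summary lines contain `Inference timings` and `Memory footprint`:

```shell
# Hypothetical comparison helper: run the same model once per flag set
# and print only the summary lines for each run.
BENCH_DIR=/data/local/tmp/benchmark
BENCH_BIN=android_arm_benchmark_model

compare_configs() {
  model="$1"
  shift
  for flags in "$@"; do
    echo "== ${flags:-default flags}"
    # $flags is intentionally unquoted so "--a --b" splits into two arguments.
    # shellcheck disable=SC2086
    adb shell "$BENCH_DIR/$BENCH_BIN" "--graph=$BENCH_DIR/$model" $flags 2>&1 |
      tr -d '\r' | grep -E 'Inference timings|Memory footprint'
  done
}
```

Usage: `compare_configs model_depth_test.tflite "" "--num_threads=4" "--use_gpu=true"`, where `""` runs the tool with its default settings. Because each flag set is split on whitespace, individual flag values cannot contain spaces.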

Conclusion

In summary, using TensorFlow’s “Benchmark Model” tool significantly simplifies testing machine learning models on mobile devices. With the ability to adjust various parameters, such as GPU execution, the tool provides a realistic view of the model’s statistics, making it a valuable ally in the early stages of mobile ML projects.

Have fun with your projects!

If this post helped you, please consider buying me a coffee 🙂