It’s usual that big, well-documented, and reliable datasets for training and testing some Machine Learning models are often hard to find.

Data augmentation (DA) is a concept that some (or all) data that is going to be used to train a Machine Learning model, will be artificially modified to generate more inputs.

When dealing with images, this task basically creates multiple alterations of each image through flips, rotations, rescaling, cropping, noise, and so on.

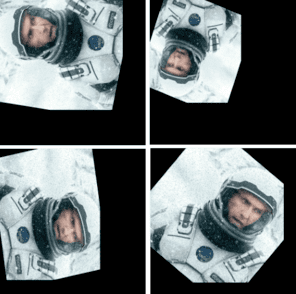

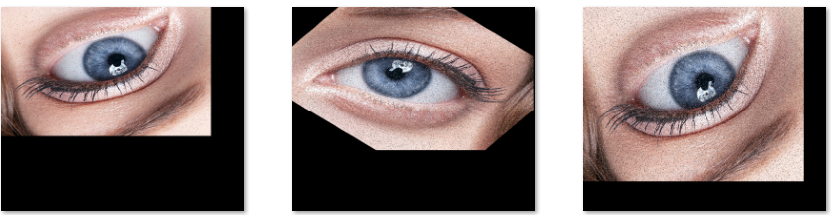

For example, consider this image:

And now, the same image after some geometric transformations.

For a Machine Learning algorithm, these images are considerably different and might provide additional information to the model.

Recently, I needed to train a very specific model using images I gathered by myself. So, I didn’t have enough data to do an acceptable training/validation procedure. That’s when I discovered Data Augmentation.

It’s not hard to notice that is DA is a very repetitive process and an automatic way of doing it would be helpful. Because of that, I decided to implement a script for doing the job. Now I decided to make it fully available on my Github.

Usage

The script was implemented in Python and can be used by the following pattern:

1 | dataAugmentation.py -i <imageName> -n <quantity> -r <randomizerLevel> -o <OutputFolder> -m <BorderMode> -w <noiseLevel> |

Parameters

- -i : Image name

- -n : The number of output images

- -o : The output folder

- -r : Specifies how aggressively the image will change in the output. Default = 20

- -m : Border mode: default=0 (cv2.BORDER_REPLICATE) use 1 to choose cv2.BORDER_CONSTANT

- -w : Noise level between 0 and 1, default=0

- -h : help

Feel free to use it and mail me the results! 🙂